Wow Server Blade Be Used Again

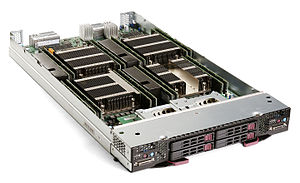

Supermicro SBI-7228R-T2X blade server, containing two dual-CPU server nodes

A blade server is a stripped-down server computer with a modular design optimized to minimize the use of physical space and energy. Blade servers have many components removed to save space, minimize power consumption and address other considerations, while still having all the functional components to be considered a computer.[1] Unlike a rack-mount server, a blade server fits inside a blade enclosure, which can hold multiple blade servers, providing services such as power, cooling, networking, various interconnects and management. Together, blades and the blade enclosure form a blade system, which may itself be rack-mounted. Different blade providers have differing principles regarding what to include in the blade itself, and in the blade system as a whole.

In a standard server-rack configuration, one rack unit, or 1U, which is 19 inches (480 mm) wide and 1.75 inches (44 mm) tall, defines the minimum possible size of any equipment. The primary benefit and justification of blade computing relates to lifting this restriction so as to reduce size requirements. The most common computer rack form-factor is 42U high, which limits the number of discrete computer devices directly mountable in a rack to 42 components. Blades do not have this limitation. As of 2014, densities of up to 180 servers per blade system (or 1440 servers per rack) are achievable with blade systems.[2]
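The density figures quoted above can be checked with a few lines of arithmetic. The following is a minimal sketch in Python; the function and constant names are illustrative assumptions and do not come from any vendor tool.

```python
# Rack-density arithmetic using only the figures quoted in the text above.
RACK_HEIGHT_U = 42               # most common rack height, in rack units
SERVERS_PER_BLADE_SYSTEM = 180   # quoted maximum per blade system (2014)
SERVERS_PER_RACK_BLADES = 1440   # quoted maximum per rack (2014)


def enclosures_per_rack(servers_per_rack: int, servers_per_enclosure: int) -> int:
    """Number of blade systems implied by the per-rack and per-system figures."""
    return servers_per_rack // servers_per_enclosure


def density_gain(blade_servers_per_rack: int, rack_height_u: int) -> float:
    """Ratio of blade servers to 1U servers in a rack of the same height."""
    return blade_servers_per_rack / rack_height_u


if __name__ == "__main__":
    print(enclosures_per_rack(SERVERS_PER_RACK_BLADES, SERVERS_PER_BLADE_SYSTEM))  # 8
    print(round(density_gain(SERVERS_PER_RACK_BLADES, RACK_HEIGHT_U), 1))          # 34.3
```

In other words, the quoted per-rack figure corresponds to eight such blade systems in one rack, roughly 34 times the 42 servers that fit when each device occupies a full rack unit.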

Blade enclosure

The enclosure (or chassis) performs many of the non-core computing services found in most computers. Non-blade systems typically use bulky, hot and space-inefficient components, and may duplicate these across many computers that may or may not perform at capacity. By locating these services in one place and sharing them among the blade computers, the overall utilization becomes higher. The specifics of which services are provided vary by vendor.

HP BladeSystem c7000 enclosure (populated with 16 blades), with two 3U UPS units below

Power

Computers operate over a range of DC voltages, but utilities deliver power as AC, and at higher voltages than required within computers. Converting this current requires one or more power supply units (or PSUs). To ensure that the failure of one power source does not affect the operation of the computer, even entry-level servers may have redundant power supplies, again adding to the bulk and heat output of the design.

The blade enclosure's power supply provides a single power source for all blades within the enclosure. This single power source may come as a power supply in the enclosure or as a dedicated separate PSU supplying DC to multiple enclosures.[3] [4] This setup reduces the number of PSUs required to provide a resilient power supply.
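To illustrate why sharing reduces the PSU count, the sketch below compares servers that each carry their own redundant pair with an enclosure that sizes a shared pool for the total load plus spares. All numbers (16 servers, 400 W per server, 2400 W per PSU) are hypothetical assumptions chosen for the example, not figures from any product.

```python
import math


def standalone_psu_count(servers: int, psus_per_server: int = 2) -> int:
    """PSUs needed when every server carries its own redundant supplies."""
    return servers * psus_per_server


def shared_psu_count(total_load_w: float, psu_capacity_w: float, spares: int = 1) -> int:
    """PSUs needed for a shared pool: enough to carry the load, plus spares."""
    return math.ceil(total_load_w / psu_capacity_w) + spares


if __name__ == "__main__":
    servers = 16              # hypothetical enclosure population
    load_per_server_w = 400   # hypothetical per-blade power draw
    psu_capacity_w = 2400     # hypothetical PSU rating

    print(standalone_psu_count(servers))                                  # 32
    print(shared_psu_count(servers * load_per_server_w, psu_capacity_w))  # 4
```

Under these assumptions the shared pool tolerates the loss of one PSU with 4 units, where individually redundant servers would need 32.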

The popularity of blade servers, and their own appetite for power, has led to an increase in the number of rack-mountable uninterruptible power supply (or UPS) units, including units targeted specifically towards blade servers (such as the BladeUPS).

Cooling

During operation, electrical and mechanical components produce heat, which a system must dissipate to ensure the proper functioning of its components. Most blade enclosures, like most computing systems, remove heat by using fans.

A frequently underestimated problem when designing high-performance computer systems involves the conflict between the amount of heat a system generates and the ability of its fans to remove the heat. The blade's shared power and cooling means that it does not generate as much heat as traditional servers. Newer blade enclosures feature variable-speed fans and control logic, or even liquid cooling systems[5] [6] that adjust to meet the system's cooling requirements.

At the same time, the increased density of blade-server configurations can still result in higher overall demands for cooling with racks populated at over 50% full. This is especially true with early-generation blades. In absolute terms, a fully populated rack of blade servers is likely to require more cooling capacity than a fully populated rack of standard 1U servers. This is because one can fit up to 128 blade servers in the same rack that will only hold 42 1U rack-mount servers.[7]
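The comparison between a rack of 128 blades and a rack of 42 1U servers can be made concrete with a rough estimate. This is only a sketch: the 300 W per-server figure is a hypothetical assumption, and the model treats all electrical power as heat that the rack must remove.

```python
def rack_heat_load_w(servers: int, watts_per_server: float) -> float:
    """Approximate heat load of a rack, treating all drawn power as heat."""
    return servers * watts_per_server


def break_even_fraction(blades_per_rack: int, servers_1u_per_rack: int) -> float:
    """Fraction of a 1U server's power each blade may draw for equal rack heat."""
    return servers_1u_per_rack / blades_per_rack


if __name__ == "__main__":
    assumed_watts = 300.0  # hypothetical per-server draw, same for both kinds

    blade_rack = rack_heat_load_w(128, assumed_watts)
    rack_1u = rack_heat_load_w(42, assumed_watts)

    print(blade_rack)                               # 38400.0
    print(rack_1u)                                  # 12600.0
    print(round(blade_rack / rack_1u, 2))           # 3.05
    print(round(break_even_fraction(128, 42), 3))   # 0.328
```

With equal per-server power, the blade rack produces about three times the heat. Each blade would have to draw less than roughly a third of a 1U server's power for the two racks to need the same cooling capacity.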

Networking

Blade servers generally include integrated or optional network interface controllers for Ethernet, host adapters for Fibre Channel storage systems, or converged network adapters that combine storage and data via one Fibre Channel over Ethernet interface. In many blades, at least one interface is embedded on the motherboard and extra interfaces can be added using mezzanine cards.

A blade enclosure can provide individual external ports to which each network interface on a blade will connect. Alternatively, a blade enclosure can aggregate network interfaces into interconnect devices (such as switches) built into the blade enclosure or in networking blades.[8] [9]

Storage

While computers typically use hard disks to store operating systems, applications and data, these are not necessarily required locally. Many storage connection methods (e.g. FireWire, SATA, E-SATA, SCSI, SAS DAS, FC and iSCSI) are readily moved outside the server, though not all are used in enterprise-level installations. Implementing these connection interfaces within the computer presents similar challenges to the networking interfaces (indeed iSCSI runs over the network interface), and similarly these can be removed from the blade and presented individually or aggregated either on the chassis or through other blades.

The ability to boot the blade from a storage area network (SAN) allows for an entirely disk-free blade, an example of which is the Intel Modular Server System.

Other blades

Since blade enclosures provide a standard method for delivering basic services to computer devices, other types of devices can also use blade enclosures. Blades providing switching, routing, storage, SAN and Fibre Channel access can slot into the enclosure to provide these services to all members of the enclosure.

Systems administrators can use storage blades where a requirement exists for additional local storage.[10] [11] [12]

Uses

Cray XC40 supercomputer cabinet with 48 blades, each containing 4 nodes with 2 CPUs each

Blade servers function well for specific purposes such as web hosting, virtualization, and cluster computing. Individual blades are typically hot-swappable. As users deal with larger and more diverse workloads, they add more processing power, memory and I/O bandwidth to blade servers. Although blade-server technology in theory allows for open, cross-vendor systems, most users buy modules, enclosures, racks and management tools from the same vendor.

Eventual standardization of the technology might result in more choices for consumers;[13] [14] as of 2009, increasing numbers of third-party software vendors had started to enter this growing field.[15]

Blade servers do not, however, provide the answer to every computing problem. One can view them as a form of productized server farm that borrows from mainframe packaging, cooling, and power-supply technology. Very large computing tasks may still require server farms of blade servers, and because of blade servers' high power density, can suffer even more acutely from the heating, ventilation, and air conditioning problems that affect large conventional server farms.

History

Developers first placed complete microcomputers on cards and packaged them in standard 19-inch racks in the 1970s, soon after the introduction of 8-bit microprocessors. This architecture was used in the industrial process control industry as an alternative to minicomputer-based control systems. Early models stored programs in EPROM and were limited to a single function with a small real-time executive.

The VMEbus architecture (c. 1981) defined a computer interface that included implementation of a board-level computer installed in a chassis backplane with multiple slots for pluggable boards to provide I/O, memory, or additional computing.

In the 1990s, the PCI Industrial Computer Manufacturers Group (PICMG) developed a chassis/blade structure for the then emerging Peripheral Component Interconnect (PCI) bus, called CompactPCI. CompactPCI was actually invented by Ziatech Corp of San Luis Obispo, CA and developed into an industry standard. Common among these chassis-based computers was the fact that the entire chassis was a single system. While a chassis might include multiple computing elements to provide the desired level of performance and redundancy, there was always one master board in charge, or two redundant fail-over masters coordinating the operation of the entire system. Moreover, this system architecture provided management capabilities not present in typical rack-mount computers, much more like those in ultra-high-reliability systems, managing power supplies and cooling fans as well as monitoring the health of other internal components.

Demands for managing hundreds and thousands of servers in the emerging Internet data centers, where the manpower simply didn't exist to keep pace, meant that a new server architecture was needed. In 1998 and 1999 this new blade server architecture was developed at Ziatech based on their CompactPCI platform to house as many as 14 "blade servers" in a standard 19" 9U-high rack-mounted chassis, allowing in this configuration as many as 84 servers in a standard 84 Rack Unit 19" rack. What this new architecture brought to the table was a set of new interfaces to the hardware, specifically to provide the capability to remotely monitor the health and performance of all major replaceable modules that could be changed or replaced while the system was in operation. The ability to change, replace or add modules within the system while it is in operation is known as hot-swap. Unique among server systems, the Ketris blade servers routed Ethernet across the backplane (where server blades would plug in), eliminating more than 160 cables in a single 84 Rack Unit high 19" rack. For a large data center, tens of thousands of Ethernet cables, prone to failure, would be eliminated. Further, this architecture provided the capability to inventory the modules installed in each system chassis remotely, without the blade servers operating. This architecture enabled the ability to provision (power up, install operating systems and application software, e.g. a web server) remotely from a Network Operations Center (NOC). The system architecture, when it was announced, was called Ketris, named after the Ketri sword, worn by nomads in such a way as to be drawn very quickly as needed. It was first envisioned by Dave Bottom and developed by an engineering team at Ziatech Corp in 1999, and demonstrated at the Networld+Interop show in May 2000. Patents were awarded for the Ketris blade server architecture.[citation needed] In October 2000 Ziatech was acquired by Intel Corp and the Ketris blade server systems would become a product of the Intel Network Products Group.[citation needed]

PICMG expanded the CompactPCI specification with the use of standard Ethernet connectivity between boards across the backplane. The PICMG 2.16 CompactPCI Packet Switching Backplane specification was adopted in September 2001.[16] This provided the first open architecture for a multi-server chassis.

The second generation of Ketris would be developed at Intel as an architecture for the telecommunications industry to support the build-out of IP-based telecom services, and in particular the LTE (Long Term Evolution) cellular network build-out. PICMG followed with the larger and more feature-rich AdvancedTCA specification, targeting the telecom industry's need for a high-availability and dense computing platform with extended product life (10+ years). While AdvancedTCA systems and boards typically sell for higher prices than blade servers, their operating costs (manpower to manage and maintain) are dramatically lower, and operating costs often dwarf the acquisition cost for traditional servers. AdvancedTCA is promoted for telecommunications customers; however, in real-world implementations in Internet data centers, where thermal as well as other maintenance and operating costs had become prohibitively expensive, this blade server architecture with remote automated provisioning, health and performance monitoring and management would have a significantly lower operating cost.[clarification needed]

The first commercialized blade-server architecture[citation needed] was invented by Christopher Hipp and David Kirkeby, and their patent was assigned to Houston-based RLX Technologies.[17] RLX, which consisted primarily of former Compaq Computer Corporation employees, including Hipp and Kirkeby, shipped its first commercial blade server in 2001.[18] RLX was acquired by Hewlett Packard in 2005.[19]

The name blade server appeared when a card included the processor, memory, I/O and non-volatile program storage (flash memory or small hard disk(s)). This allowed manufacturers to package a complete server, with its operating system and applications, on a single card/board/blade. These blades could then operate independently within a common chassis, doing the work of multiple separate server boxes more efficiently. In addition to the most obvious benefit of this packaging (less space consumption), additional efficiency benefits have become clear in power, cooling, management, and networking due to the pooling or sharing of common infrastructure to support the entire chassis, rather than providing each of these on a per-server-box basis.

In 2011, research firm IDC identified the major players in the blade market as HP, IBM, Cisco, and Dell.[20] Other companies selling blade servers include Supermicro and Hitachi.

Blade models

Cisco UCS blade servers in a chassis

The prominent brands in the blade server market are Supermicro, Cisco Systems, HPE, Dell and IBM, though the latter sold its x86 server business to Lenovo in 2014 after selling its consumer PC line to Lenovo in 2005.[21]

In 2009, Cisco announced blades in its Unified Computing System product line, consisting of a 6U-high chassis with up to 8 blade servers in each chassis. It had a heavily modified Nexus 5K switch, rebranded as a fabric interconnect, and management software for the whole system.[22] HP's initial line consisted of two chassis models: the c3000, which holds up to 8 half-height ProLiant line blades (also available in tower form), and the c7000 (10U), which holds up to 16 half-height ProLiant blades. Dell's product, the M1000e, is a 10U modular enclosure and holds up to 16 half-height PowerEdge blade servers or 32 quarter-height blades.

See also

- Blade PC

- HP BladeSystem

- Mobile PCI Express Module (MXM)

- Modular crate electronics

- Multibus

- Server computer

References

- ^ "Data Center Networking – Connectivity and Topology Design Guide" (PDF). Enterasys Networks, Inc. 2011. Archived from the original (PDF) on 2013-10-05. Retrieved 2013-09-05.

- ^ "HP updates Moonshot server platform with ARM and AMD Opteron hardware". Incisive Business Media Limited. 9 Dec 2013. Archived from the original on 16 April 2014. Retrieved 2014-04-25.

- ^ "HP BladeSystem p-Class Infrastructure". Archived from the original on 2006-05-18. Retrieved 2006-06-09.

- ^ Sun Blade Modular System

- ^ Sun Power and Cooling

- ^ HP Thermal Logic technology

- ^ "HP BL2x220c". Archived from the original on 2008-08-29. Retrieved 2008-08-21.

- ^ Sun Independent I/O

- ^ HP Virtual Connect

- ^ IBM BladeCenter HS21 Archived October 13, 2007, at the Wayback Machine

- ^ "HP storage blade". Archived from the original on 2007-04-30. Retrieved 2007-04-18.

- ^ Verari Storage Blade

- ^ http://www.techspot.com/news/26376-intel-endorses-industrystandard-blade-design.html TechSpot

- ^ http://news.cnet.com/2100-1010_3-5072603.html CNET Archived 2011-12-26 at the Wayback Machine

- ^ https://www.theregister.co.uk/2009/04/07/ssi_blade_specs/ The Register

- ^ PICMG specifications Archived 2007-01-09 at the Wayback Machine

- ^ US 6411506, Hipp, Christopher & Kirkeby, David, "High density web server chassis system and method", published 2002-06-25, assigned to RLX Technologies

- ^ "RLX helps data centres with switch to blades". ARN. October 8, 2001. Retrieved 2011-07-30.

- ^ "HP Will Acquire RLX To Bolster Blades". www.informationweek.com. October 3, 2005. Archived from the original on January 3, 2013. Retrieved 2009-07-24.

- ^ "Worldwide Server Market Revenues Increase 12.1% in First Quarter as Market Demand Continues to Improve, According to IDC" (Press release). IDC. 2011-05-24. Archived from the original on 2011-05-26. Retrieved 2015-03-20.

- ^ "Transitioning x86 to Lenovo". IBM.com. Retrieved 27 September 2014.

- ^ "Cisco Unleashes the Power of Virtualization with Industry's First Unified Computing System". Press release. March 16, 2009. Archived from the original on March 21, 2009. Retrieved March 27, 2017.

External links

- BladeCenter blade servers – x86 processor-based servers

Source: https://en.wikipedia.org/wiki/Blade_server
